
Data is pivotal in shaping business strategies, optimizing operations, and driving growth. An infographic report by Raconteur estimates that by 2025, the world will generate a staggering 463 exabytes of data daily.

Effective data tools are crucial for businesses to stay competitive amid this exponential growth in data, enabling them to unlock valuable insights and gain a strategic advantage in the marketplace.

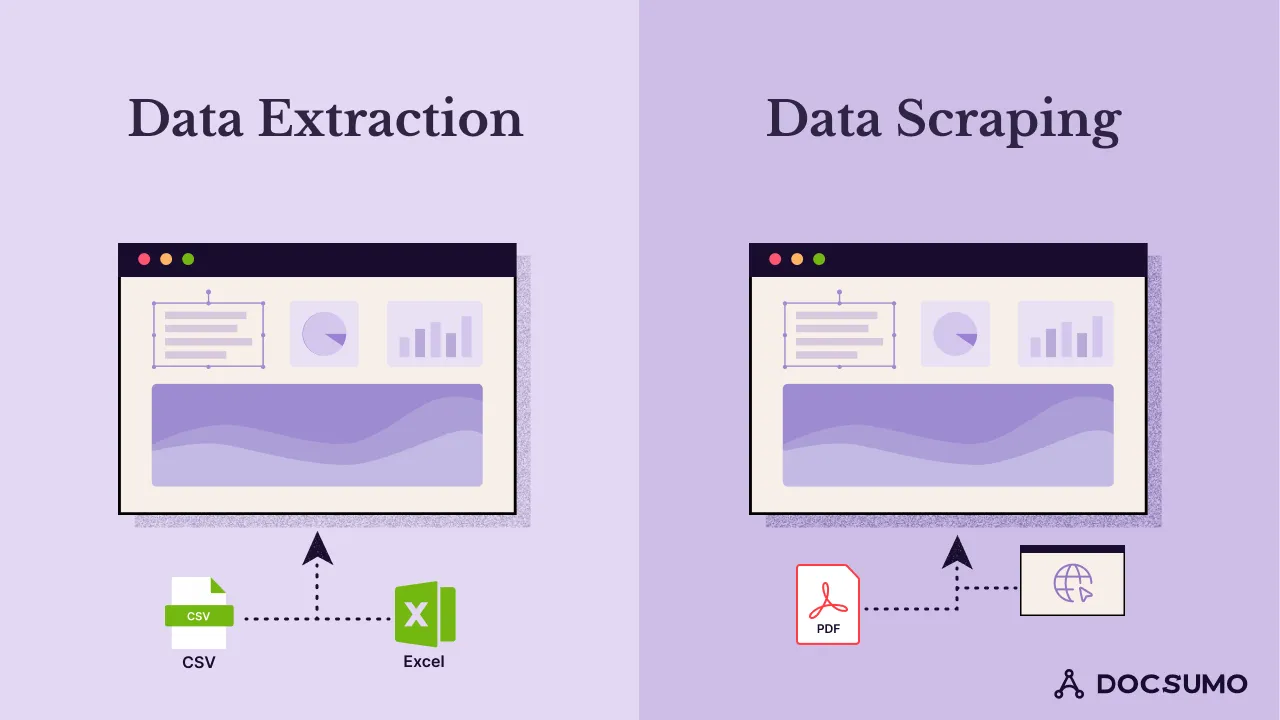

Two common methods for acquiring data are data extraction and data scraping. Understanding the nuances between these techniques is essential for efficient data management and decision-making.

To choose the right approach, weigh the pros and cons of data scraping versus data extraction. Let's explore the intricacies of each technique and its significance in the ever-changing realm of data management.

Data extraction involves retrieving specific information from structured sources such as databases, APIs, or formatted documents. Its aim is to select and pull relevant data in a systematic, organized way.

This process typically requires interaction with structured sources through established protocols or APIs, making it more suitable for accessing well-defined data formats. Data extraction is commonly used for migration, integration, or exporting data into other systems.
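To make the structured-source workflow concrete, here is a minimal sketch using Python's built-in `sqlite3` and `csv` modules. It runs a parameterized query and exports the result as CSV text that another system could import. The in-memory database, the `invoices` table, and the `extract_to_csv` helper are hypothetical stand-ins for a real source system.

```python
import csv
import io
import sqlite3


def extract_to_csv(conn: sqlite3.Connection, query: str, params: tuple = ()) -> str:
    """Run a parameterized query against a structured source and return the rows as CSV text."""
    cursor = conn.execute(query, params)
    # cursor.description has one entry per selected column; item 0 is the column name.
    header = [column[0] for column in cursor.description]
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(header)
    writer.writerows(cursor.fetchall())
    return buffer.getvalue()


if __name__ == "__main__":
    # Hypothetical source system: an in-memory "invoices" table.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE invoices (id INTEGER PRIMARY KEY, vendor TEXT, total REAL)")
    conn.executemany(
        "INSERT INTO invoices (vendor, total) VALUES (?, ?)",
        [("Acme", 120.5), ("Globex", 75.0), ("Acme", 42.25)],
    )
    # Pull only the rows and columns the target system needs.
    print(extract_to_csv(conn, "SELECT vendor, total FROM invoices WHERE vendor = ? ORDER BY id", ("Acme",)))
```

Because the source has a fixed schema, the query alone decides what gets extracted. That is a large part of why extraction from structured sources stays simple.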

Data extraction enables businesses to access and utilize data efficiently, improving decision-making and operational efficiency.

Automated tools such as OCR and Intelligent Data Extraction software further streamline this process by quickly and accurately extracting and processing data from various sources.

Some of the well-known OCR tools for data extraction are:

These tools reduce manual effort, minimize errors, and accelerate data-driven insights, empowering businesses to stay competitive.

Data scraping is the process of extracting information from unstructured or semi-structured sources such as websites, HTML pages, or PDF documents. Unlike data extraction, data scraping primarily targets unstructured sources that lack a predefined format or protocol.

Data scraping involves using web scraping tools and techniques to extract valuable information from websites by parsing the HTML code. This approach is commonly used for web research, competitor analysis, or data gathering for machine learning models.
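As a rough illustration of "parsing the HTML code," the sketch below uses Python's standard-library `html.parser` to collect the text of elements that carry a given CSS class. The markup, the `price` class, and the `ProductParser` name are hypothetical. For simplicity, it assumes each target element contains only text and no nested tags. Production scrapers usually rely on more robust tools such as Scrapy or Selenium.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collect the text inside elements whose class list contains target_class."""

    def __init__(self, target_class: str) -> None:
        super().__init__()
        self.target_class = target_class
        self._capturing = False
        self.values: list[str] = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value is None for bare attributes.
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self._capturing = True
            self.values.append("")

    def handle_endtag(self, tag):
        # Assumes target elements hold plain text, so any end tag closes the capture.
        self._capturing = False

    def handle_data(self, data):
        if self._capturing:
            self.values[-1] += data


if __name__ == "__main__":
    html_doc = """
    <ul>
      <li><span class="name">Widget</span> <span class="price">$19.99</span></li>
      <li><span class="name">Gadget</span> <span class="price">$24.50</span></li>
    </ul>
    """
    parser = ProductParser("price")
    parser.feed(html_doc)
    print(parser.values)  # ['$19.99', '$24.50']
```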

Below are some real-world applications across various industries:

Incorporating data scraping in these ways streamlines data collection and analysis and opens up new avenues for innovation and strategic planning across different sectors.

Distinguishing between data extraction and data scraping is crucial for selecting the most suitable method for your requirements. Here's a detailed comparison:

Data extraction focuses on retrieving specific information from structured sources such as databases and APIs. Common use cases include data migration, integration, and exporting data to other systems.

Data scraping targets unstructured or semi-structured sources such as websites, HTML pages, or PDFs. It is used for web research, competitor analysis, and data gathering for machine learning models.

Data extraction interacts with structured sources in predefined formats, which makes locating and extracting data easier.

Data scraping deals with unstructured or semi-structured sources, such as websites, whose formats are inconsistent. It requires parsing HTML code and handling dynamic web pages.

Data extraction retrieves data through established protocols or APIs, for example by querying a database directly, connecting to an API, or parsing formatted documents.

Data scraping uses web scraping tools and techniques to parse HTML code and extract data. Libraries such as Scrapy and Selenium can automate the process.
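The "connecting to an API, or parsing formatted documents" route for data extraction can be sketched with Python's `json` module. The payload shape, field names, and the `Invoice` and `parse_invoices` names are hypothetical. A real integration would first fetch the document from the provider's API, which is omitted here so that the example runs offline.

```python
import json
from dataclasses import dataclass


@dataclass
class Invoice:
    number: str
    vendor: str
    total: float


def parse_invoices(payload: str) -> list[Invoice]:
    """Pull selected fields out of a JSON document whose schema is known in advance."""
    data = json.loads(payload)
    # Fixed field names make extraction a direct lookup; a KeyError signals a schema change.
    return [
        Invoice(number=item["number"], vendor=item["vendor"]["name"], total=float(item["total"]))
        for item in data["invoices"]
    ]


if __name__ == "__main__":
    payload = '{"invoices": [{"number": "INV-7", "vendor": {"name": "Acme"}, "total": "99.90"}]}'
    print(parse_invoices(payload))
```

The scraping example above has to search for patterns in free-form markup. This one only needs to look fields up by name, which is the practical difference between the two approaches.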

Data extraction uses tools tailored to the data source, such as database query tools, API integration tools, or document parsing libraries.

Data scraping relies on web scraping tools and frameworks such as Selenium, or on custom scripts, to automate collection, handle dynamic pages, and parse HTML code.

Data extraction works with structured sources whose predefined formats ensure consistency and ease of extraction.

Data scraping works with unstructured or semi-structured sources whose layouts and data structures vary. It requires flexibility in identifying patterns and extracting data elements. Using a screenshot API during scraping can also help when extracting data from sources with variable layouts.
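One simple way to identify patterns across variable layouts is to match the value itself rather than its position in the page. The sketch below uses a regular expression to find a price, whether it appears as `$1,299.00` in a sentence or as `USD 24.50` inside a table cell. The pattern and the `find_price` name are illustrative assumptions. Regex matching is a swapped-in technique here, not a complete scraping strategy, and it would miss formats it does not anticipate.

```python
import re
from typing import Optional

# Hypothetical pattern: "$" or "USD" followed by an amount such as 1,299.00, 24.50, or 19.
PRICE_RE = re.compile(r"(?:\$|USD\s?)(\d+(?:,\d{3})*(?:\.\d+)?)")


def find_price(text: str) -> Optional[float]:
    """Return the first price-like amount in text, or None if nothing matches."""
    match = PRICE_RE.search(text)
    if match is None:
        return None
    # Strip thousands separators before converting.
    return float(match.group(1).replace(",", ""))


if __name__ == "__main__":
    for snippet in ["Price: $1,299.00", "<td>USD 24.50</td>", "Out of stock"]:
        print(snippet, "->", find_price(snippet))
```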

Data extraction is comparatively straightforward, especially with structured sources. Automation and adherence to standardized formats make it efficient and reliable.

Data scraping can be more complex because it involves parsing HTML, managing dynamic pages, and adapting to website layout changes. Robust parsing techniques are essential for identifying elements accurately and maintaining data integrity during scraping. The complexity increases further when pages depend on JavaScript interactions or require login credentials.

Data extraction is well suited to large-scale extraction from structured sources, because automation and defined formats allow large data volumes to be handled efficiently.

The scalability of data scraping depends on website complexity and available resources. Automated scraping can slow down on complex websites or with large data volumes, and scraping large amounts of data can also raise legal concerns.
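One common way to keep large-scale extraction from a structured source efficient is to stream results in fixed-size batches rather than loading everything into memory at once. The sketch below does this with `sqlite3`'s `Cursor.fetchmany`. The `events` table, the batch size, and the `extract_in_batches` name are assumptions for illustration.

```python
import sqlite3
from collections.abc import Iterator


def extract_in_batches(conn: sqlite3.Connection, query: str, batch_size: int = 1000) -> Iterator[list]:
    """Yield query results batch_size rows at a time until the cursor is exhausted."""
    cursor = conn.execute(query)
    while True:
        rows = cursor.fetchmany(batch_size)
        if not rows:
            break
        yield rows


if __name__ == "__main__":
    # Hypothetical source table with 2,500 rows.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE events (id INTEGER)")
    conn.executemany("INSERT INTO events VALUES (?)", [(i,) for i in range(2500)])
    for batch in extract_in_batches(conn, "SELECT id FROM events ORDER BY id"):
        # A real pipeline would write each batch to the target system here.
        print(f"loaded {len(batch)} rows")
```

Memory use is bounded by the batch size rather than the table size, so each batch can be loaded or transformed while the next is still being read.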

Data extraction is generally legal when it uses authorized sources and APIs. Compliance with regulations and terms of service is still crucial, as are permission and adherence to the source's rules.

Data scraping may raise legal and ethical concerns, especially when it is done without permission or violates terms of service. Complying with legal frameworks and avoiding copyright infringement or violations of the Computer Fraud and Abuse Act (CFAA) are essential. Obtaining authorization from website owners and following their terms of service helps keep scraping activities legal.
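One practical courtesy check before scraping is to honor a site's `robots.txt` rules. The sketch below uses Python's standard `urllib.robotparser`. Note that `robots.txt` is a convention, not a grant of legal permission: passing this check does not replace reading the terms of service or obtaining authorization. The rules, user agent, and URLs are hypothetical. In practice you would load the live file with `set_url()` and `read()` instead of passing text in.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Hypothetical rules: everything is allowed except the /private/ section.
    rules = "User-agent: *\nDisallow: /private/\n"
    print(is_allowed(rules, "example-bot", "https://example.com/products"))
    print(is_allowed(rules, "example-bot", "https://example.com/private/data"))
```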

Data extraction and data scraping serve distinct purposes. Data extraction excels at retrieving data from organized sources, while data scraping tackles the challenge of extracting information from messy, unstructured sources.

Understanding these differences empowers you to select the best approach for your data acquisition needs.

Choosing the right approach between data extraction and data scraping is crucial for effective data management. Each method offers distinct strengths, and the optimal choice depends on your project's specific needs. Here's a breakdown of key factors to consider:

Understanding the strengths and considerations of data extraction and scraping can help you decide the best approach for your specific data management needs.

Data management continues to evolve to meet the demands of a data-driven business landscape. It relies on both data extraction from structured sources and data scraping from unstructured sources, which are crucial for navigating vast amounts of information.

Advances in artificial intelligence, machine learning, and natural language processing offer tools for extracting meaningful insights. The synergy of optical character recognition (OCR) and Intelligent Data Extraction enhances the precision and efficiency of data extraction from physical and digital formats.

Docsumo emerges as a leading solution in this space, propelled by its AI-driven capabilities. It distinguishes itself by:

In short, Docsumo helps businesses extract data more efficiently, improving operations and enabling smarter, data-driven decisions.

Get the free trial of Docsumo now.

Data scraping is typically more effective than data extraction for gathering information from web pages or other unstructured online sources. It lets you collect data from many websites and platforms, such as competitor pricing data or customer reviews. It is especially useful for constantly changing or dynamic data sources, where data extraction may not keep up with updates in real time.

Data extraction tools are primarily designed to extract data from structured or semi-structured sources such as databases, documents, or spreadsheets. While these tools can handle web data to some extent, they are typically not as efficient as data scraping tools when extracting data from web pages. Data scraping tools are specifically designed for web scraping. They allow businesses to gather data directly from websites, including unstructured online sources.

When scraping web data, it is crucial to comply with data privacy laws, such as the General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA), to protect the privacy of individuals. To ensure compliance, consider the following steps:

- Familiarize yourself with the data protection laws in your jurisdiction and in the jurisdictions of the websites you are scraping.
- Obtain proper consent, if required, before scraping any personal data from websites.
- Anonymize or aggregate data whenever possible to avoid collecting personally identifiable information.
- Respect each website's terms of service or usage policies regarding web scraping. Some explicitly prohibit scraping or restrict how the data may be used.
- Consult legal professionals to ensure compliance with relevant data protection laws and regulations.

Prioritizing privacy and obtaining the necessary permissions is always advisable before scraping personal or sensitive data from websites.
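The "anonymize or aggregate" step can be sketched as two small functions. The first drops fields that identify a person before anything is stored. The second keeps only per-product statistics. The record layout and the `PII_FIELDS` set are hypothetical. Removing direct identifiers and aggregating reduces privacy risk, but on its own it does not guarantee compliance with GDPR, CCPA, or any other law.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical names of fields in scraped review records that identify a person.
PII_FIELDS = {"reviewer_name", "reviewer_email"}


def minimize(record: dict) -> dict:
    """Return a copy of the record without personally identifying fields."""
    return {key: value for key, value in record.items() if key not in PII_FIELDS}


def aggregate_by_product(records: list[dict]) -> dict:
    """Reduce individual reviews to a review count and average rating per product."""
    ratings = defaultdict(list)
    for record in records:
        ratings[record["product"]].append(record["rating"])
    return {
        product: {"count": len(values), "avg_rating": round(mean(values), 2)}
        for product, values in ratings.items()
    }


if __name__ == "__main__":
    scraped = [
        {"product": "A", "rating": 4, "reviewer_name": "Ann", "reviewer_email": "ann@example.com"},
        {"product": "A", "rating": 5, "reviewer_name": "Bob", "reviewer_email": "bob@example.com"},
    ]
    print(aggregate_by_product([minimize(r) for r in scraped]))
```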